L1: The column space of A#

We hope you already know some linear algebra. It is a beautiful subject—more useful to more people than calculus (in our quiet opinion).

—Gilbert Strang

Prof. Strang has kindly put the book online for free; you can take a look at it here. The online video for L1 is here. Thank you, Professor!

For convenience, I will paste screenshots of the lecture notes here when necessary.

from IPython.display import Image

Image("./imgs/c1-p1.png")

Note

This website gives a visualization of matrix multiplication. Check it out, it’s awesome!

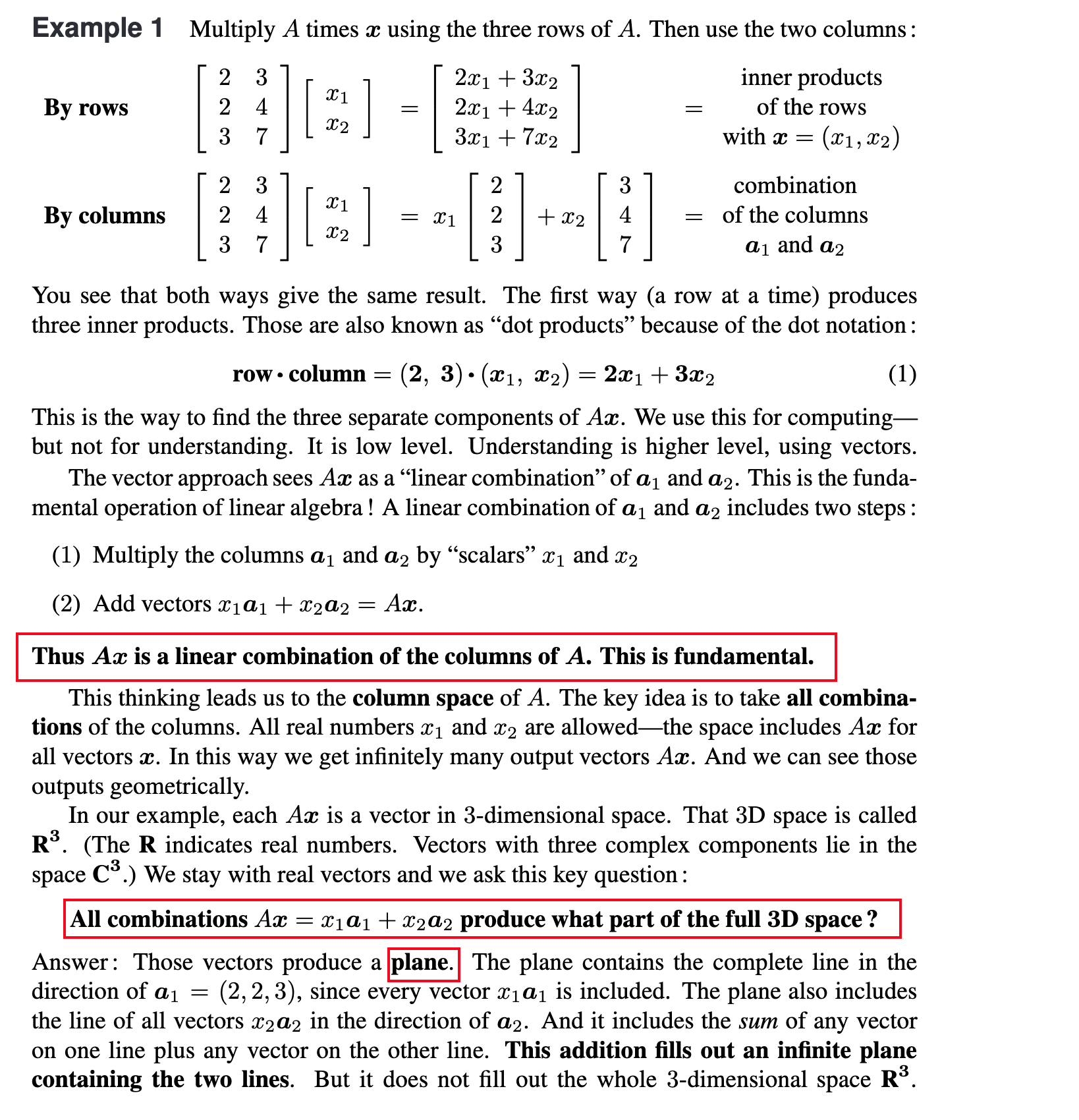

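Conclusion 1#

There are two ways to compute the product of a matrix and a vector: row by row (each entry of \(Ax\) is the dot product of a row of \(A\) with \(x\)), or column by column. The second way is very important: the product \(Ax\) is a linear combination of the columns of \(A\), with the entries of \(x\) as the coefficients. Here \(A\) is an \(n\times d\) matrix with \(n\geq d\), and \(x\in\mathbb{R}^d\).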
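A small sketch of the two ways, using NumPy. The matrix and vector below are made-up example values, not taken from the lecture notes.

```python
import numpy as np

# Example values chosen for illustration only.
A = np.array([[1.0, 4.0],
              [2.0, 5.0],
              [3.0, 6.0]])   # n = 3 rows, d = 2 columns
x = np.array([2.0, -1.0])

# Way 1 (rows): each entry of Ax is the dot product of one row of A with x.
by_rows = np.array([A[i, :] @ x for i in range(A.shape[0])])

# Way 2 (columns): Ax is a combination of the columns of A,
# weighted by the entries of x.
by_columns = x[0] * A[:, 0] + x[1] * A[:, 1]

print(by_rows)
print(by_columns)
```

Both results agree with `A @ x`; the column view is the one that leads to the idea of the column space.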

Conclusion 2#

The column space of \(A\) is the set of all vectors \(Ax\) for all \(x\) in \(\mathbb{R}^d\) (one coefficient per column of \(A\)). When there are two columns and they do not point in the same direction, the column space is a plane.

Conclusion 3#

A vector \(y\in\mathbb{R}^n\) that is orthogonal to the column space of \(A\) satisfies \(A^Ty=0\).
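As a quick numerical check, here is a sketch with a made-up \(3\times 2\) matrix. For two columns in \(\mathbb{R}^3\), the cross product gives a vector orthogonal to both, so it is a convenient choice of \(y\) (this shortcut only works in three dimensions).

```python
import numpy as np

# Example matrix chosen for illustration only.
A = np.array([[1.0, 4.0],
              [2.0, 5.0],
              [3.0, 6.0]])

# A vector orthogonal to both columns of A (3D only).
y = np.cross(A[:, 0], A[:, 1])

# A^T y stacks the dot products of y with each column of A.
print(A.T @ y)

# y is then orthogonal to every vector Ax in the column space.
x = np.array([0.5, -2.0])
print(y @ (A @ x))
```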

Important

If you read the book The Elements of Statistical Learning, Eq. (3.5) in Chapter 3 is the equation for the parameter \(\beta\), i.e., \(X^T(Y-X\beta)=0\), where \(Y\in\mathbb{R}^N\), \(X\) is an \(N\times (d+1)\) matrix, and \(\beta\in\mathbb{R}^{d+1}\). The equation says that the residual \(Y-X\beta\) is orthogonal to the column space of \(X\).
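The orthogonality of the residual can be checked numerically. This is a minimal sketch on synthetic random data (the sizes `N` and `d` and the seed are arbitrary assumptions), using `np.linalg.lstsq` to obtain the least-squares \(\beta\).

```python
import numpy as np

rng = np.random.default_rng(0)   # synthetic data, for illustration only
N, d = 50, 3

# N x (d+1) design matrix; the first column of ones is the intercept.
X = np.column_stack([np.ones(N), rng.normal(size=(N, d))])
Y = rng.normal(size=N)

# Least-squares estimate of beta (minimizes ||Y - X beta||^2).
beta, *_ = np.linalg.lstsq(X, Y, rcond=None)

# The residual should be orthogonal to every column of X.
residual = Y - X @ beta
print(X.T @ residual)   # entries are zero up to rounding error
```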

Conclusion 4#

If \(Ax=b\), where \(b=(b_1,b_2,\dots,b_n)\), has a solution \(x\), then \(b\) is in the column space of \(A\). Conversely, if there is no solution, then \(b\) is not in the column space.
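One way to test this numerically is to compare ranks: \(b\) is in the column space exactly when appending \(b\) as an extra column does not raise the rank. The helper name `in_column_space` and the example values are hypothetical, chosen for illustration.

```python
import numpy as np

def in_column_space(A, b):
    """Return True if b is a combination of the columns of A.

    Appending b to A leaves the rank unchanged exactly when
    b already lies in the column space.
    """
    augmented = np.column_stack([A, b])
    return np.linalg.matrix_rank(augmented) == np.linalg.matrix_rank(A)

# Example values chosen for illustration only.
A = np.array([[1.0, 4.0],
              [2.0, 5.0],
              [3.0, 6.0]])

b_inside = A @ np.array([1.0, 1.0])    # built from the columns, so Ax = b is solvable
b_outside = np.array([1.0, 0.0, 0.0])  # not on the plane spanned by the columns

print(in_column_space(A, b_inside))
print(in_column_space(A, b_outside))
```

Rank comparisons in floating point depend on a tolerance, so this is a sketch for small, well-scaled examples rather than a robust solver.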

Fun Fact#

The column space of matrix

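A \(3\times 3\) matrix \(A\) is invertible exactly when its columns are independent; its column space is then all of \(\mathbb{R}^3\).

If the only solution of \(Ax=0\) is \(x=0\), then the columns of \(A\) are independent. In that case \(Ax=b\) has at most one solution, and when \(A\) is square (hence invertible) it has exactly one solution for every \(b\).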
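A short sketch contrasting a \(3\times 3\) matrix with independent columns against the all-ones matrix, whose columns are dependent. The entries of `A` and `b` are arbitrary example values.

```python
import numpy as np

# Example matrix with independent columns (determinant is 18).
A = np.array([[2.0, 1.0, 0.0],
              [1.0, 3.0, 1.0],
              [0.0, 1.0, 4.0]])
b = np.array([1.0, 2.0, 3.0])

rank_A = np.linalg.matrix_rank(A)   # 3 independent columns -> invertible
x = np.linalg.solve(A, b)           # the unique solution of Ax = b

# All columns of the ones matrix are equal, so only one is independent.
ones = np.ones((3, 3))
rank_ones = np.linalg.matrix_rank(ones)

print(rank_A, x)
print(rank_ones)
```

Calling `np.linalg.solve(ones, b)` would raise `LinAlgError`, because the all-ones matrix is singular.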

Basis#

A basis for a subspace is a full set of independent vectors. All vectors in the subspace are combinations of the basis vectors.

Given an \(n\times d\) matrix \(A\) whose columns span a subspace \(S\), we can find a basis of \(S\) this way:

If column 1 of \(A\) is not all zero, put it into the matrix \(C\).

If column 2 of \(A\) is not a multiple of column 1, put it into \(C\).

If column 3 of \(A\) is not a combination of columns 1 and 2, put it into \(C\). Continue.

Finally, we get a matrix \(C\) of size \(n\times r\), where \(r\) is the rank of \(A\), i.e., the number of independent columns of \(A\). The columns of \(C\) form a basis of \(S\).
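The column-by-column procedure above can be sketched as follows. The function name `independent_columns` and the example matrix are hypothetical; the test "is this column a combination of the ones already kept?" is done here by checking whether adding the column raises the rank, which is one possible way to implement it.

```python
import numpy as np

def independent_columns(A):
    """Greedily keep each column of A that is not a combination of the kept ones.

    Returns the matrix C of kept columns and their indices in A.
    """
    kept = []
    for j in range(A.shape[1]):
        candidate = kept + [j]
        # Column j is independent of the kept columns exactly when
        # the rank equals the number of candidate columns.
        if np.linalg.matrix_rank(A[:, candidate]) == len(candidate):
            kept = candidate
    return A[:, kept], kept

# Example: column 2 = 2 * column 1, column 4 = column 1 + column 3.
A = np.array([[1.0, 2.0, 1.0, 2.0],
              [2.0, 4.0, 3.0, 5.0],
              [3.0, 6.0, 5.0, 8.0]])

C, kept = independent_columns(A)
print(kept)      # indices of the columns placed into C (0-based)
print(C.shape)   # n x r
```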

The rank is defined as the number of independent columns of \(A\), which is also the dimension of the column space of \(A\).

Conclusion 5#

The number of independent rows of \(A\) is the same as the number of independent columns of \(A\). Thus, the row rank equals the column rank.
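A quick numerical illustration (not a proof): the rank of a matrix and of its transpose agree. The example matrix is made up; its rows are the columns of \(A^T\).

```python
import numpy as np

# Example matrix chosen for illustration: 3 rows, 4 columns.
A = np.array([[1.0, 2.0, 1.0, 2.0],
              [2.0, 4.0, 3.0, 5.0],
              [3.0, 6.0, 5.0, 8.0]])

column_rank = np.linalg.matrix_rank(A)    # independent columns of A
row_rank = np.linalg.matrix_rank(A.T)     # independent rows of A

print(column_rank, row_rank)
```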

Problem set#

1.4: Suppose \(A\) is the 3 by 3 matrix ones(3, 3) of all ones. Find two independent vectors \(x\) and \(y\) that solve \(Ax=0\) and \(Ay=0\). Write that first equation \(Ax=0\) (with numbers) as a combination of the columns of \(A\). Why don’t I ask for a third independent vector with \(Az=0\)?

If \(Ax=0\), then every multiple \(\bar{x}=cx\) of \(x\) also satisfies \(A\bar{x}=0\).

If we also have \(Ay=0\), then every combination \(\bar{x}+\bar{y}\) of \(x\) and \(y\) satisfies \(A\bar{x}+A\bar{y}=A(\bar{x}+\bar{y})=0\).

If we could find a third independent vector \(z\) with \(Az=0\), then \(x\), \(y\), \(z\) would span \(\mathbb{R}^3\), so every vector \(w\) in \(\mathbb{R}^3\) would satisfy \(Aw=0\). That is not true: for example, \(A(1,0,0)^T=(1,1,1)^T\neq 0\).

Important

The dimension of the nullspace = the number of columns - the dimension of the column space
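For Problem 1.4, one possible choice (an assumption, since many answers work) is \(x=(1,-1,0)\) and \(y=(1,0,-1)\). The sketch below checks both, and counts the nullspace dimension by counting the near-zero singular values of the square matrix \(A\); the threshold `1e-10` is an arbitrary tolerance.

```python
import numpy as np

A = np.ones((3, 3))

# One possible pair of independent solutions of Ax = 0.
x = np.array([1.0, -1.0, 0.0])
y = np.array([1.0, 0.0, -1.0])

# Ax = 0 written as a combination of the columns: 1*col1 - 1*col2 + 0*col3.
combo = 1.0 * A[:, 0] - 1.0 * A[:, 1] + 0.0 * A[:, 2]

rank = np.linalg.matrix_rank(A)                  # dimension of the column space
singular_values = np.linalg.svd(A, compute_uv=False)
nullity = int(np.sum(singular_values < 1e-10))   # dimension of the nullspace (A is square)

print(A @ x, A @ y, combo)
print(rank, nullity, A.shape[1] - rank)
```

With rank 1 and 3 columns, the nullspace has dimension 2, which is why a third independent solution cannot exist.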