L4: Eigenvectors and Eigenvalues#

As I said before, eigenvectors are those vectors that do not change direction when a linear transformation \(A\) is applied to them (they are only scaled, and flipped when \(\lambda<0\)). Formally, an eigenvector \(x\) of \(A\) is a nonzero vector such that \(Ax = \lambda x\) for some scalar \(\lambda\), the eigenvalue. What happens if we apply \(A\) to \(Ax\) again? The vector \(x\) is scaled by \(\lambda\) once more and stays on the same line: \(A(Ax) = A(\lambda x) = \lambda (Ax) = \lambda^2 x\). Thus, \(A^2x=\lambda^2x\).
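The definition can be checked numerically. The sketch below uses NumPy and a small symmetric matrix chosen only for illustration (it is not from the lecture); it verifies \(Ax=\lambda x\) and \(A^2x=\lambda^2x\) for one eigenpair.

```python
import numpy as np

# Hypothetical 2x2 example matrix, chosen only for illustration.
A = np.array([[2.0, 1.0],
              [1.0, 2.0]])

# np.linalg.eig returns the eigenvalues and a matrix whose columns are eigenvectors.
eigenvalues, eigenvectors = np.linalg.eig(A)

lam = eigenvalues[0]
x = eigenvectors[:, 0]

# Applying A once scales x by lam; applying it twice scales x by lam**2.
first_application = A @ x
second_application = A @ first_application

print(np.allclose(first_application, lam * x))
print(np.allclose(second_application, lam**2 * x))
```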

Important

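If \(A\) is an \(n\times n\) matrix with \(n\) independent eigenvectors \(x_1,\dots,x_n\), then any vector \(v\) can be written as a linear combination of the eigenvectors with some coefficients \(c\in\mathbb{R}^n\), i.e., \(v=Xc\), where the columns of \(X\) are the eigenvectors. Thus, since \(A\) simply scales each eigenvector by its eigenvalue, \(A^k v = c_1\lambda_1^k x_1 + \cdots + c_n\lambda_n^k x_n\).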
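As a rough illustration of the expansion \(v=Xc\), the sketch below solves for the coefficients \(c\) and then compares \(\sum_i c_i\lambda_i^k x_i\) with the direct product \(A^k v\). The matrix, the vector `v`, and the power `k` are assumptions made up for this example.

```python
import numpy as np

# Hypothetical matrix and vector, chosen only for illustration.
A = np.array([[2.0, 1.0],
              [1.0, 2.0]])
v = np.array([3.0, -1.0])
k = 5

# Columns of X are the eigenvectors of A.
eigenvalues, X = np.linalg.eig(A)

# Coefficients of v in the eigenvector basis: solve X c = v.
c = np.linalg.solve(X, v)

# Each coefficient is scaled by lambda_i**k, then mapped back through X.
via_eigenvectors = X @ (eigenvalues**k * c)

# Direct computation of A^k v for comparison.
direct = np.linalg.matrix_power(A, k) @ v

print(np.allclose(X @ c, v))
print(np.allclose(via_eigenvectors, direct))
```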

Four properties of eigenvectors#

from IPython.display import Image

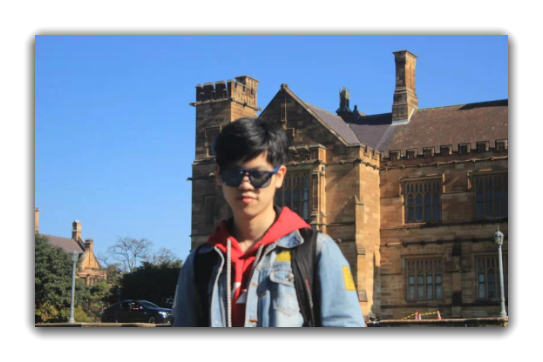

Image('imgs/l4-m1.png')

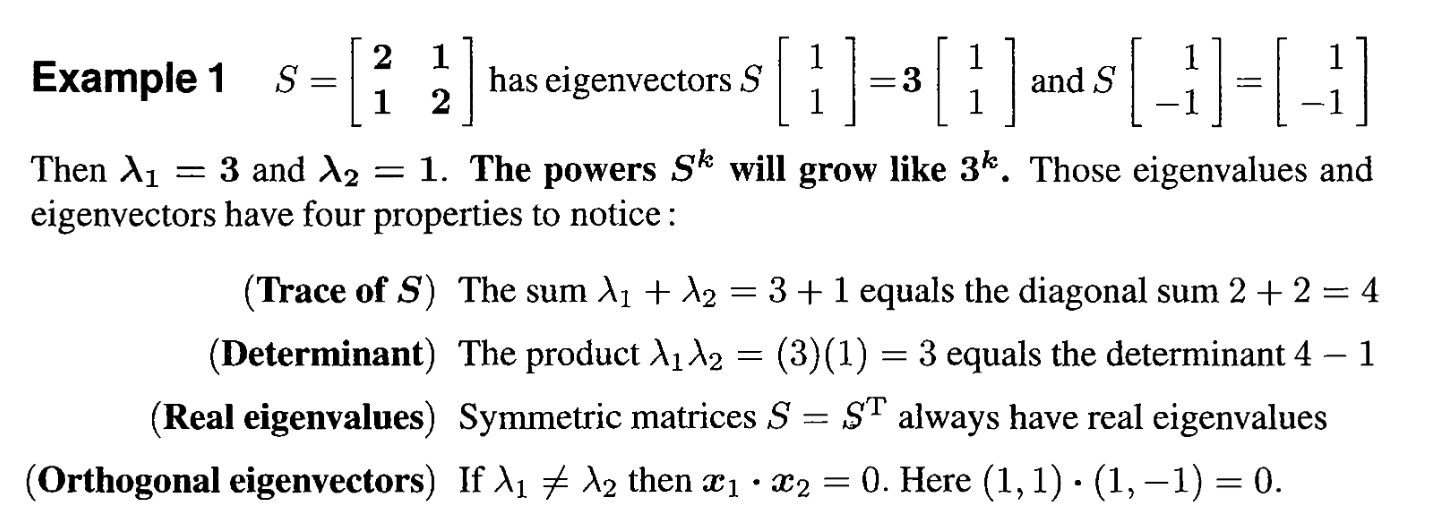

For the determinant part, recall the definition of the determinant of a matrix \(A\). I will just show you a beautiful picture from 3Blue1Brown.

from IPython.display import Image

Image('imgs/l4-m2.png', width=800)
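A small numerical sketch may help connect the determinant to the eigenvalues. It assumes the property in question is the standard fact that \(\det A\) equals the product of the eigenvalues (and the trace equals their sum), and it uses a made-up \(2\times 2\) matrix. It also computes the area of the parallelogram spanned by the columns of \(A\), which is the geometric picture of the determinant in two dimensions.

```python
import numpy as np

# Hypothetical example matrix with eigenvalues 2 and 5.
A = np.array([[4.0, 1.0],
              [2.0, 3.0]])

eigenvalues = np.linalg.eigvals(A)

# Determinant and trace computed directly and from the eigenvalues.
det_direct = np.linalg.det(A)
det_from_eigenvalues = np.prod(eigenvalues)
trace_from_eigenvalues = np.sum(eigenvalues)

# The unit square is mapped to the parallelogram spanned by the columns of A;
# its area is the absolute value of the 2D cross product of those columns.
u, w = A[:, 0], A[:, 1]
area = abs(u[0] * w[1] - u[1] * w[0])

print(np.isclose(det_direct, det_from_eigenvalues))
print(np.isclose(np.trace(A), trace_from_eigenvalues))
print(np.isclose(area, abs(det_direct)))
```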

Four more conclusions about eigenvectors#

from IPython.display import Image

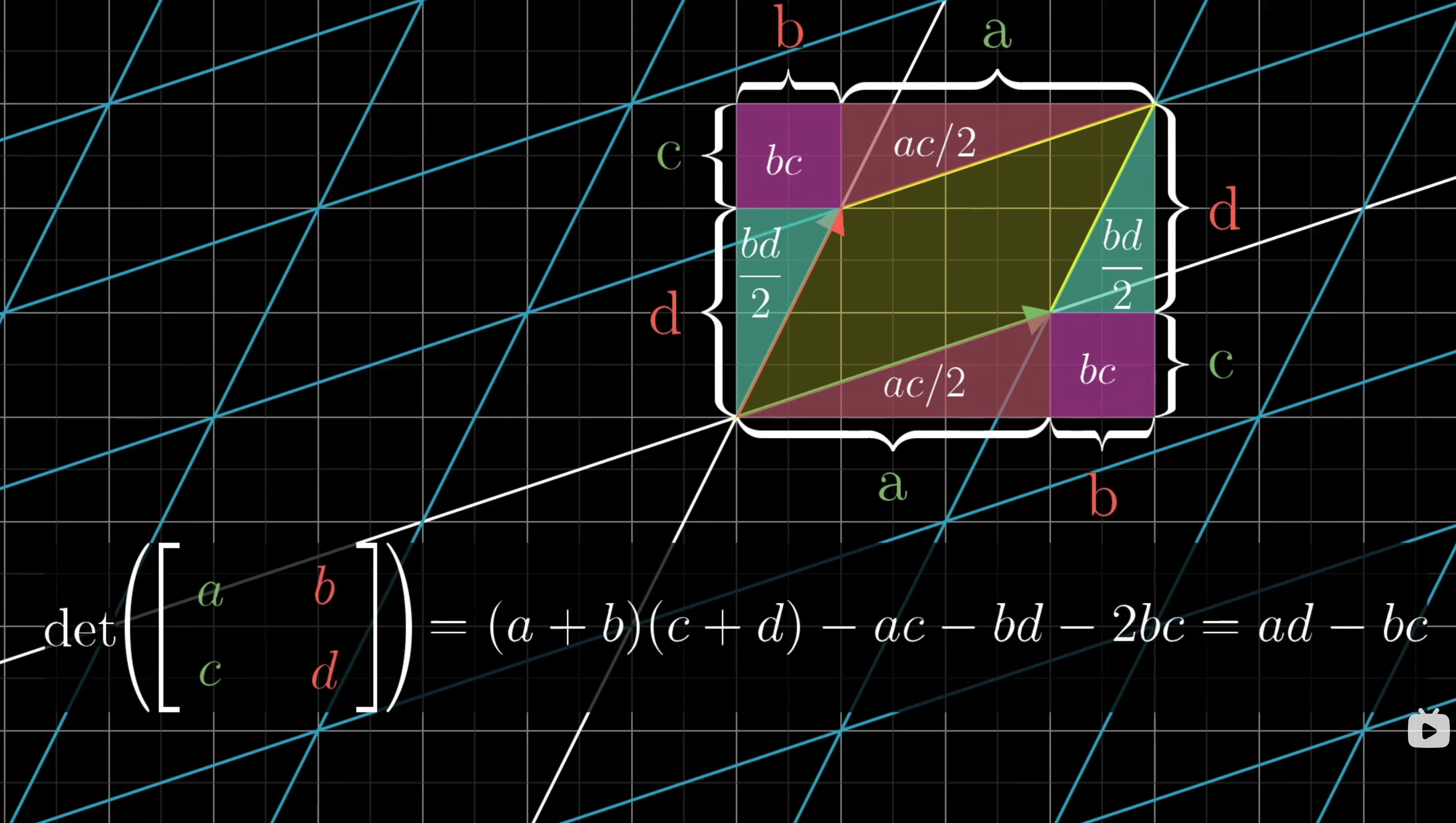

Image('imgs/l4-m3.png', width=800)

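The last one tells us an important fact: every real symmetric matrix has orthogonal eigenvectors. Symmetric matrices are not the only ones, though. Orthogonal eigenvectors exist exactly when \(A^TA=AA^T\) (such matrices are called normal). Non-symmetric matrices that satisfy this condition include skew-symmetric matrices (\(A^T=-A\)) and orthogonal matrices (\(A^TA=I\)); their eigenvalues and eigenvectors are in general complex, so orthogonality is measured with the conjugate transpose.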
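A quick numerical check of the orthogonality claim, together with one non-symmetric matrix that does satisfy \(A^TA=AA^T\). Both matrices are assumptions chosen for illustration: `S` is an arbitrary symmetric matrix, and `R` is a 90-degree rotation, which is skew-symmetric and has complex eigenvectors.

```python
import numpy as np

# Hypothetical real symmetric matrix.
S = np.array([[2.0, 1.0],
              [1.0, 3.0]])

# eigh is NumPy's routine for symmetric (Hermitian) matrices;
# the columns of Q are orthonormal eigenvectors.
_, Q = np.linalg.eigh(S)
symmetric_is_orthogonal = np.allclose(Q.T @ Q, np.eye(2))

# A 90-degree rotation: not symmetric, yet R^T R = R R^T holds.
R = np.array([[0.0, -1.0],
              [1.0, 0.0]])
is_normal = np.allclose(R.T @ R, R @ R.T)

# Its eigenvectors are complex, so orthogonality uses the conjugate transpose.
_, V = np.linalg.eig(R)
rotation_is_orthogonal = np.allclose(V.conj().T @ V, np.eye(2))

print(symmetric_is_orthogonal, is_normal, rotation_is_orthogonal)
```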

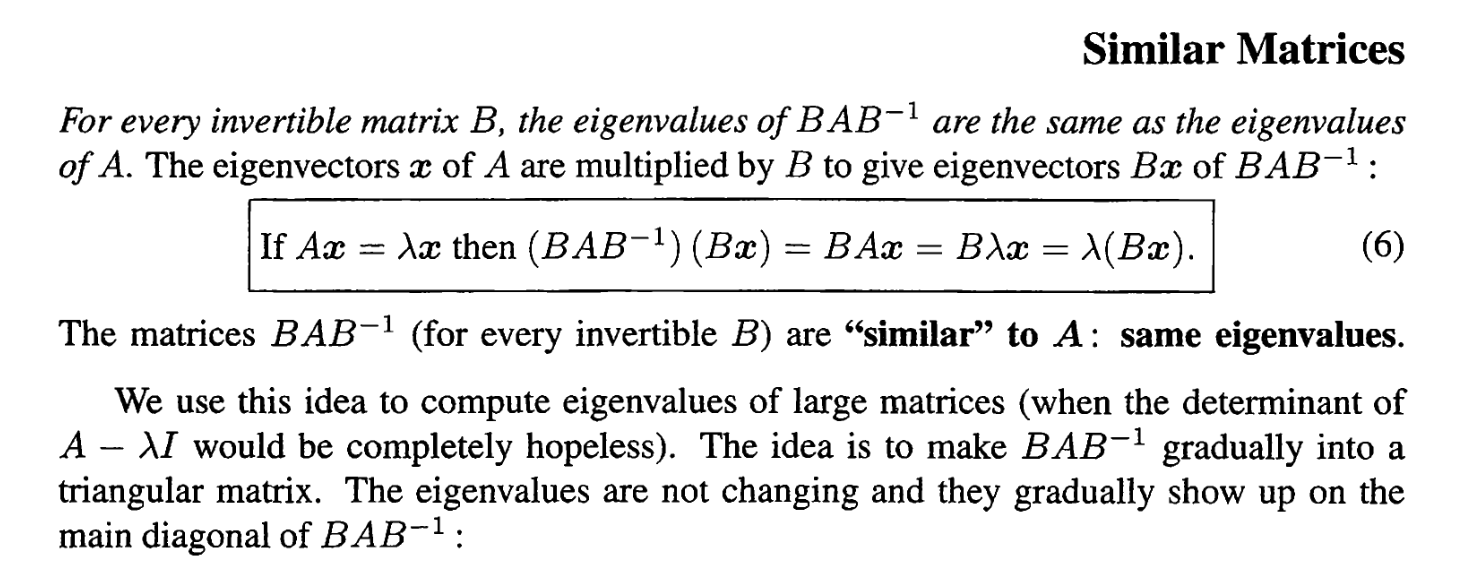

Conclusion3: similar matrices have the same eigenvalues#

Image('imgs/l4-m4.png', width=800)

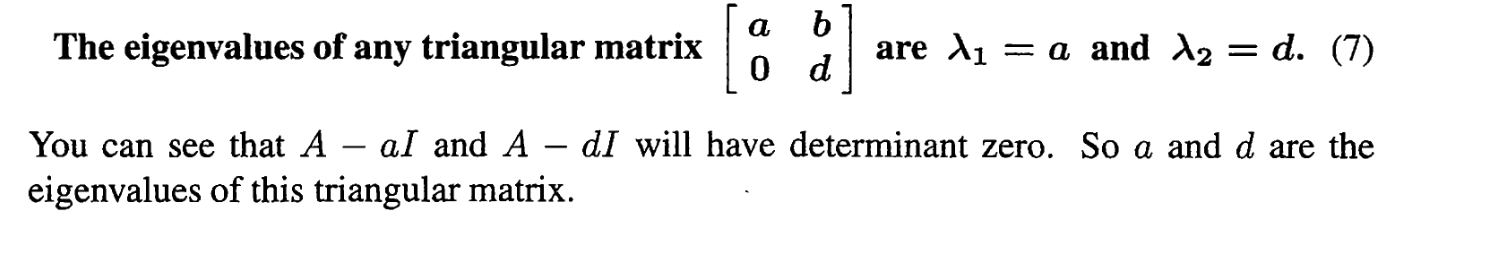
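To see this conclusion in numbers, the sketch below builds \(B=M^{-1}AM\) from an arbitrary invertible \(M\) and compares the eigenvalues of \(A\) and \(B\). The matrices `A` and `M` are made up for this example.

```python
import numpy as np

# Hypothetical matrix A (eigenvalues 2 and 5) and an invertible matrix M.
A = np.array([[4.0, 1.0],
              [2.0, 3.0]])
M = np.array([[1.0, 2.0],
              [3.0, 4.0]])

# B is similar to A by construction.
B = np.linalg.inv(M) @ A @ M

# Sort before comparing, because eigvals does not guarantee an ordering.
eigs_A = np.sort(np.linalg.eigvals(A))
eigs_B = np.sort(np.linalg.eigvals(B))

print(np.allclose(eigs_A, eigs_B))
```

The eigenvectors generally differ: if \(Ax=\lambda x\), then \(B(M^{-1}x)=\lambda(M^{-1}x)\).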

Conclusion4: the diagonal entries of a triangular matrix are its eigenvalues#

Image('imgs/l4-m5.png', width=800)
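A minimal check of this conclusion on a made-up upper triangular matrix. The reason it works is that \(\det(T-\lambda I)\) for a triangular \(T\) is the product of the diagonal entries \(t_{ii}-\lambda\).

```python
import numpy as np

# Hypothetical upper triangular matrix.
T = np.array([[1.0, 2.0, 3.0],
              [0.0, 4.0, 5.0],
              [0.0, 0.0, 6.0]])

# Compare the sorted eigenvalues with the sorted diagonal entries.
eigs_T = np.sort(np.linalg.eigvals(T))
diagonal = np.sort(np.diag(T))

print(np.allclose(eigs_T, diagonal))
```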

Conclusion5: diagonalization speeds up the computation of powers#

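Assume a matrix \(A\) has \(n\) independent eigenvectors. Then we can diagonalize it as \(A=X\Lambda X^{-1}\), where the columns of \(X\) are the eigenvectors and \(\Lambda\) is the diagonal matrix of eigenvalues. Thus, the inner factors \(X^{-1}X\) cancel in any power of \(A\), leaving \(A^k=X\Lambda^kX^{-1}\).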

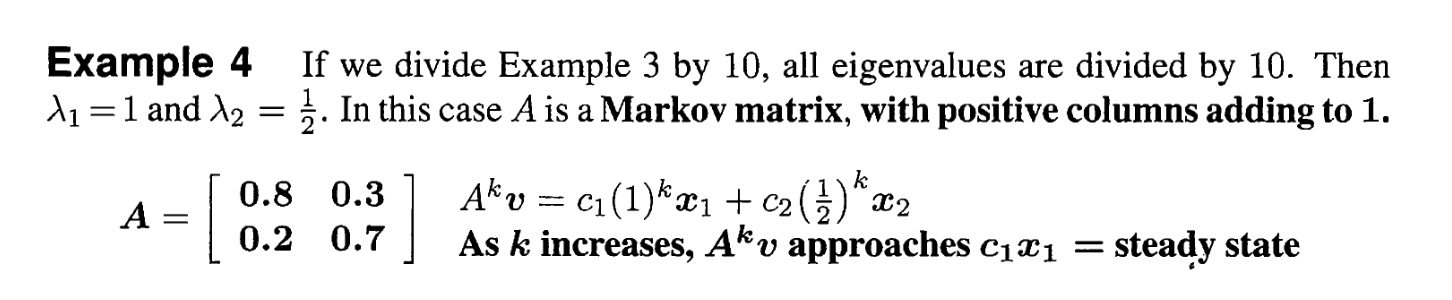
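The sketch below compares \(X\Lambda^kX^{-1}\) with a direct computation of \(A^k\). The matrix and the exponent are assumptions picked for illustration; the point is that only the \(n\) eigenvalues need to be raised to the power \(k\).

```python
import numpy as np

# Hypothetical diagonalizable matrix and exponent.
A = np.array([[4.0, 1.0],
              [2.0, 3.0]])
k = 10

# Diagonalize: columns of X are eigenvectors, Lambda holds the eigenvalues.
eigenvalues, X = np.linalg.eig(A)
X_inv = np.linalg.inv(X)

# Powering the diagonal matrix only requires powering n scalars.
Lambda_k = np.diag(eigenvalues**k)
power_via_diagonalization = X @ Lambda_k @ X_inv

# Reference value from repeated matrix multiplication.
power_direct = np.linalg.matrix_power(A, k)

print(np.allclose(power_via_diagonalization, power_direct))
```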

Conclusion6: a Markov matrix reaches a steady state after a long time

Image('imgs/l4-m6.png', width=800)
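The steady state can be illustrated with a small simulation. This sketch assumes the convention that a Markov matrix has positive entries and columns that sum to 1; the matrix `M` and the starting vector are made up. Repeated multiplication converges to the eigenvector for \(\lambda=1\), because the contribution of every other eigenvalue (here \(0.5\)) shrinks with each step.

```python
import numpy as np

# Hypothetical Markov matrix: positive entries, each column sums to 1.
M = np.array([[0.8, 0.3],
              [0.2, 0.7]])

# Start with all probability in the first state and iterate u <- M u.
u = np.array([1.0, 0.0])
for _ in range(50):
    u = M @ u

# The steady state is the eigenvector for eigenvalue 1, scaled to sum to 1.
eigenvalues, X = np.linalg.eig(M)
index_of_one = np.argmin(np.abs(eigenvalues - 1.0))
steady_state = X[:, index_of_one].real
steady_state = steady_state / steady_state.sum()

print(np.allclose(u, steady_state))
```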

For the introduction of Multiplicity#

Refer to Section 1.6 of the book.